My notes from PDC Session ES 02

Notes from ES 02: Architecting Services for Windows Azure

Presentation will cover how to architect Azure services

Describe the service life cycle management

Show how Azure architecture enables automation

Show how developers are freed from cloud platform issues

Main points of the presentation

• Cloud services have specific design considerations

○ Are always on, distributed, and large scale; failure is expected, so the design has to handle it

• Azure is an OS for the cloud

○ Handles scale out, dynamic and on-demand.

○ Each machine is running its own kernel

○ But all the services sitting on top are what make up the Azure OS

• Azure manages services, not just servers

○ Tell it what you want, and it will help automate the details

○ Servers, load balancers, etc.

○ Just describe what you want, and it auto deploys it all

• Azure frees devs from all the underlying stuff

○ Allows developers to concentrate on the business logic instead of cloud logic

They need our help building services. If we build them correctly, MS can help us automate things and keep them healthy.

Cloud computing is based on scale out, not scale up (scaling outwards with lots of parallel computers, rather than scaling up by beefing up a single computer with more RAM)

Automation is the key to reducing costs

Design considerations

Failure of any given node is expected, so be sure to expect it and handle it

State will have to be replicated

There are no install steps. You just deploy your package to the service

Handle dynamic configuration changes in your services

Services are always running; there is no maintenance window. So what happens when you want to upgrade or patch? You'll have to handle rolling upgrades, as well as schema changes.

Services are built using multiple nodes/roles. Document the service architecture & the communication paths of the elements

Benefits of adhering to these design points

Azure manages services not servers (so again describe what you want and it will auto provision it)

Can automate service lifecycle via model driven automation.

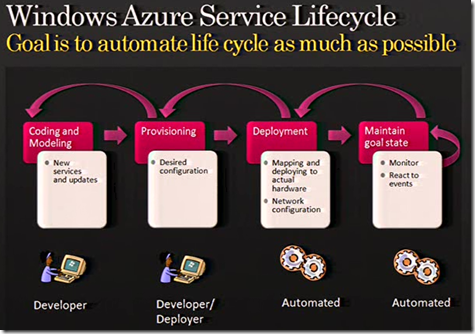

The goal is to automate the lifecycle as much as possible

Coding & modeling: running on the local PC doing the development/debugging

Provisioning: setup the desired configuration. How many instances you want, etc.

From here everything is automated as much as possible:

Deployment: Azure takes your model with the desired config, maps it to actual hardware, and configures the network for load balancing

Maintain goal state: monitor and react to certain events like hardware/software failures. So if you request 10 frontends, Azure will try to keep you at 10 when things fail.
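To make the "maintain goal state" idea concrete, here is a minimal sketch of a reconcile step that compares the healthy instance count against the requested count and starts replacements. The `Deployment` class and `reconcile` function are hypothetical names made up for illustration; the session did not show how Azure actually implements this.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Hypothetical stand-in for a deployed role; not an Azure API."""
    role: str
    desired: int                                 # goal state, e.g. 10 frontends
    healthy: set = field(default_factory=set)    # ids of running instances
    next_id: int = 0

    def start_instance(self) -> int:
        """Pretend to start a new instance and return its id."""
        instance_id = self.next_id
        self.next_id += 1
        self.healthy.add(instance_id)
        return instance_id

    def fail(self, instance_id: int) -> None:
        """Simulate a hardware or software failure of one instance."""
        self.healthy.discard(instance_id)


def reconcile(deployment: Deployment) -> int:
    """Start instances until the healthy count matches the goal.

    Returns how many instances were started.
    """
    started = 0
    while len(deployment.healthy) < deployment.desired:
        deployment.start_instance()
        started += 1
    return started


frontends = Deployment(role="frontend", desired=10)
reconcile(frontends)              # initial deployment brings up 10 instances
frontends.fail(3)                 # two instances die
frontends.fail(7)
replaced = reconcile(frontends)   # the next pass restores the goal state
print(len(frontends.healthy), replaced)
```

In a real system a step like this would presumably run continuously in response to monitoring events rather than being called by hand.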

Automation reduces cost

Service model

Describes service as distributed entities.

It is a logical description of the services. The same model is used for testing & deployment

It is a declarative composition language

• Service - the service as a whole, made up of all of the following elements

• Role - Frontend (web role), backend (worker role)

• Group - groups roles

• Endpoint - role communication point

• Channel - 2 types: logical load balancers and switches

• Interface

• Config settings - developer settings & system settings

Fault domains

Avoid single points of failure

It is a unit of failure: compute node, rack of machines, an entire datacentre.

The system considers fault domains when allocating service roles (don't put all roles in the same rack)
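As a rough illustration of spreading role instances over fault domains, the sketch below assigns instances round-robin. The function name and the round-robin strategy are assumptions for illustration only; the session did not describe the real allocation algorithm.

```python
from collections import Counter


def place_across_fault_domains(instance_count: int, fault_domains: int) -> list[int]:
    """Assign each instance a fault-domain index, round-robin.

    Hypothetical helper: it only shows the idea of never putting
    every instance of a role into the same unit of failure.
    """
    if fault_domains < 1:
        raise ValueError("need at least one fault domain")
    return [i % fault_domains for i in range(instance_count)]


# 10 frontends spread over 2 fault domains (e.g. two racks).
placement = place_across_fault_domains(instance_count=10, fault_domains=2)
per_domain = Counter(placement)
print(dict(per_domain))
```

With this placement, losing one whole fault domain still leaves half of the frontends running.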

If you have 10 frontends and want 2 fault domains, it will distribute them over two racks with 5 in each

Update domains

Ensures service stays up while updating

Unit of software/config update. Will update a set of nodes at the same time

Used when rolling forward or backward
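A small sketch of how update domains bound the impact of an upgrade: instances are grouped into update domains, and only one group is touched at a time. The helper names and the round-robin grouping are assumptions for illustration, not the fabric's real behaviour. Rolling backward would be the same walk with the old version.

```python
def assign_update_domains(instance_count: int, update_domains: int) -> list[list[int]]:
    """Group instance ids into update domains, round-robin (assumed strategy)."""
    groups: list[list[int]] = [[] for _ in range(update_domains)]
    for instance_id in range(instance_count):
        groups[instance_id % update_domains].append(instance_id)
    return groups


def rolling_upgrade(versions: dict[int, str], groups: list[list[int]], new_version: str) -> int:
    """Upgrade one update domain at a time.

    Returns the largest number of instances that were offline together.
    """
    max_offline = 0
    for group in groups:
        # Only the instances in this update domain are taken down together.
        for instance_id in group:
            versions[instance_id] = new_version
        max_offline = max(max_offline, len(group))
    return max_offline


groups = assign_update_domains(instance_count=10, update_domains=5)
versions = {instance_id: "v1" for instance_id in range(10)}
max_offline = rolling_upgrade(versions, groups, "v2")
print(groups)
print(max_offline)
```

Under these assumptions, at most 2 of the 10 instances are offline at any point during the upgrade.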

So we can say that we have 10 frontends with 5 update domains.

Dynamic config settings

When programming for a single machine you can use the registry to store things. There isn't one here. So you need a place to communicate and store things.

Communicates settings to service roles

App config settings are: Declared by developer / set by deployer
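One way to picture "declared by developer, set by deployer" together with handling changes at runtime is the sketch below. `ServiceConfig`, `on_change` and the setting names are all hypothetical; this is not the Azure configuration API, just an illustration of the pattern.

```python
from typing import Callable


class ServiceConfig:
    """Hypothetical settings holder: names are declared by the developer,
    values are supplied (and later changed) by the deployer."""

    def __init__(self, declared: set[str]) -> None:
        self._declared = set(declared)
        self._values: dict[str, str] = {}
        self._listeners: list[Callable[[str, str], None]] = []

    def on_change(self, listener: Callable[[str, str], None]) -> None:
        """Register a callback so a running role can react to new values."""
        self._listeners.append(listener)

    def set(self, name: str, value: str) -> None:
        """Deployer-side update; undeclared names are rejected."""
        if name not in self._declared:
            raise KeyError(f"setting {name!r} was not declared in the model")
        self._values[name] = value
        for listener in self._listeners:
            listener(name, value)

    def get(self, name: str) -> str:
        return self._values[name]


config = ServiceConfig(declared={"LogLevel", "QueueName"})
seen: list[tuple[str, str]] = []
config.on_change(lambda name, value: seen.append((name, value)))

config.set("LogLevel", "Info")      # initial value at deployment
config.set("LogLevel", "Verbose")   # changed later, without a redeploy
print(config.get("LogLevel"), seen)
```

The point of the callback is that the role picks up the new value while it keeps running, since there is no maintenance window to restart in.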

System config settings: pre-declared (instance id, fault domain id, update domain id), not in this CTP

Azure automation

Fabric controller

• It is the brain of the system

• Consumes the model you specify, the config settings and the payload

• Controls Compute nodes, load balancers, etc.

• Monitors and provides alerts

• The Fabric controller was actually built and defined by a model itself

When you click go

1. Allocates nodes (across fault & update domains)

2. Places OS and role images on the nodes

3. Configs settings

4. Starts the roles

5. Configures load balancers

6. Maintains desired number of roles (auto restarting nodes, or moving to new node)

Can add/remove capacity on the fly

Rolling service upgrades, performed one update domain at a time

Service isolation & security

Lots of services running on the same fabric. So need to isolate them all from each other.

A service can only access things it defines in its model. It can only communicate with endpoints you define in the model
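The isolation rule can be pictured as a deny-by-default check against the model. The role names, endpoint names and `can_connect` helper below are invented for illustration; the session did not show how the fabric enforces this.

```python
# Hypothetical model: the only (role, endpoint) pairs the service declared.
ALLOWED_CHANNELS = {
    ("frontend", "backend.work-queue"),
    ("backend", "storage.tables"),
}


def can_connect(source_role: str, endpoint: str) -> bool:
    """Deny by default: a connection is allowed only if the model declares it."""
    return (source_role, endpoint) in ALLOWED_CHANNELS


print(can_connect("frontend", "backend.work-queue"))  # declared in the model
print(can_connect("frontend", "storage.tables"))      # never declared, so refused
```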

Automatic updates of Windows security patches

Network has redundancy built in. Services are deployed across fault domains. Load balancers route traffic to active nodes only

Roadmap

Today

• Automated deployment from bare metal

• Only a subset of the service model.

• Support changing number of instances at runtime

• Simple upgrades/downgrades

• Automated failure monitoring & hardware management

• Managed code/ASP.NET

• Running in fixed sized VMs

• External virtual IP address per service

• In 1 USA data centre

2009

• Expose more of the underlying model

• Richer life cycle management

• Richer network support

• Multiple data centres