Using Silverlight to distribute workload to your clients

In my previous article I explained how you can distribute server-side processing on Azure to scale the back end of your Silverlight applications. But what if we did a 180 and used Silverlight to distribute work out to the clients instead?

The idea

For years people have been running applications on their home computers for the greater good. These applications use the ‘spare CPU cycles’ of a home computer to brute-force large computational problems. Popular examples include:

- SETI@home (help process radio signals from space in the search for extraterrestrial life)

- Folding@home (help run calculations on how proteins fold, to aid research into diseases such as Alzheimer's, ALS, Huntington's, Parkinson's and many cancers)

- distributed.net (for fun, try to crack encryption keys by brute force)

An issue with these applications is that the user has to install software on their home computer. With growing worries over trojans and viruses, and with system administrators locking down PCs, it can be difficult to install these applications. So what if we used Silverlight to help?

The theory

Because Silverlight runs .NET code, writing algorithms that run on a user’s PC is straightforward; this is part of the reason its uptake amongst developers has been so strong. It also makes it easy to port calculations that would previously have been done in a desktop client or on a server.

Once the algorithms have been written, distributing the client to users is the easy part; this is exactly what Silverlight is designed to do!

The server can host the assemblies that contain the different algorithms, and the Silverlight client can use MEF (the Managed Extensibility Framework) to load them dynamically. From there, clients can request chunks of data that need to be processed. Once the calculations have been made, the Silverlight clients send the results back to the server, where all of the results are collected.
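To make the chunking side more concrete, here is a minimal sketch of the server's bookkeeping. It is written in Python rather than the C# a real Silverlight back end would use, keeps everything in memory where a real service would use a database, and the class and method names (`WorkServer`, `request_chunk`, `submit_result`, `reissue_unfinished`) are hypothetical.

```python
from collections import deque

class WorkServer:
    """Hypothetical in-memory coordinator: splits data into chunks,
    hands them out on request and collects the results."""

    def __init__(self, data, chunk_size):
        # Split the data set into fixed-size chunks, each with an integer id.
        self.pending = deque(
            (start // chunk_size, data[start:start + chunk_size])
            for start in range(0, len(data), chunk_size)
        )
        self.in_flight = {}  # chunk id -> chunk handed out but not yet returned
        self.results = {}    # chunk id -> result reported by a client

    def request_chunk(self):
        """Return (chunk_id, chunk), or None when no work is waiting."""
        if not self.pending:
            return None
        chunk_id, chunk = self.pending.popleft()
        self.in_flight[chunk_id] = chunk
        return chunk_id, chunk

    def submit_result(self, chunk_id, result):
        """Store a client's result; results for chunks not in flight are ignored."""
        if self.in_flight.pop(chunk_id, None) is not None:
            self.results[chunk_id] = result

    def reissue_unfinished(self):
        """Re-queue chunks that were handed out but never returned (for example
        because the user closed the browser) so another client can take them."""
        for chunk_id, chunk in self.in_flight.items():
            self.pending.append((chunk_id, chunk))
        self.in_flight.clear()
```

A client calls `request_chunk`, runs the algorithm, then calls `submit_result`. Calling `reissue_unfinished` keeps work from being lost when visitors leave, but as written it also re-queues chunks that active clients are still working on; a real server would more likely track a per-chunk deadline and only re-queue chunks that have expired.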

Silverlight also gives access to isolated storage, so clients can store temporary data during processing, and a user who accidentally closes the browser window can resume a two-hour processing chunk instead of starting it again.
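A minimal sketch of the resume idea follows, assuming a running total is all the state a chunk needs. It is written in Python, with an ordinary directory standing in for isolated storage (in Silverlight this would be an `IsolatedStorageFile` store); the function name, the file naming scheme and the `every` checkpoint interval are hypothetical choices.

```python
import json
import os
from typing import Callable, Sequence

def process_with_checkpoint(chunk_id: int,
                            items: Sequence[float],
                            work: Callable[[float], float],
                            store_dir: str,
                            every: int = 100) -> float:
    """Sum work(item) over a chunk, checkpointing every `every` items so an
    interrupted run resumes where it left off. `store_dir` stands in for
    Silverlight isolated storage (hypothetical file layout)."""
    path = os.path.join(store_dir, f"chunk-{chunk_id}.json")
    start, total = 0, 0.0
    if os.path.exists(path):                      # resume a previous session
        with open(path) as f:
            saved = json.load(f)
        start, total = saved["next_index"], saved["total"]

    for i in range(start, len(items)):
        total += work(items[i])
        if (i + 1) % every == 0:
            # Write to a temp file, then rename, so a crash mid-write never
            # leaves a half-written checkpoint behind.
            tmp = path + ".tmp"
            with open(tmp, "w") as f:
                json.dump({"next_index": i + 1, "total": total}, f)
            os.replace(tmp, path)

    if os.path.exists(path):
        os.remove(path)                           # chunk finished; clean up
    return total
```

If the browser closes part-way through, at most `every` items of work are repeated on the next visit. A smaller interval loses less work but writes to storage more often, which matters given isolated storage's limited default quota.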

What are the benefits?

The main benefit here is that the end user doesn’t need to install anything on their computer to help out. If someone wants to help the search for aliens, all they need to do is point their browser at the site and leave it open for a while.

The Silverlight deployment model also makes it easy to push updates to users. If a more efficient algorithm is written, or the current problem has been solved and we want to direct users’ efforts to another one, we can simply upload new components and tell MEF to load them.
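In MEF, parts are marked with `[Export]` and discovered through a catalog, so the client only needs to know which contract to ask for. The Python sketch below mimics that idea with a plain dictionary; it is an analogy, not MEF itself, and the registry, the module names and the `{"module": ...}` assignment format sent by the server are all hypothetical.

```python
from typing import Callable, Dict, Sequence

# Hypothetical registry standing in for MEF: each processing module "exports"
# itself under a contract name, and the client runs whichever name the
# server's assignment asks for.
Module = Callable[[Sequence[float]], float]
MODULES: Dict[str, Module] = {}

def export(name: str) -> Callable[[Module], Module]:
    """Decorator that registers a processing module under `name`."""
    def register(fn: Module) -> Module:
        MODULES[name] = fn
        return fn
    return register

@export("signal-power")
def signal_power(samples: Sequence[float]) -> float:
    # Total power of a block of samples.
    return sum(s * s for s in samples)

@export("peak-search")
def peak_search(samples: Sequence[float]) -> float:
    # A different problem the project might switch users to later.
    return max(abs(s) for s in samples)

def run_assignment(assignment: Dict[str, str], samples: Sequence[float]) -> float:
    """Look up the module the server asked for and run it on the chunk."""
    module = MODULES.get(assignment["module"])
    if module is None:
        raise LookupError(f"module {assignment['module']!r} is not loaded")
    return module(samples)
```

Switching every user to a new problem then means changing the assignment the server hands out (and, in the real application, making the new assembly available for MEF to load); the client's main loop stays the same.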

Example

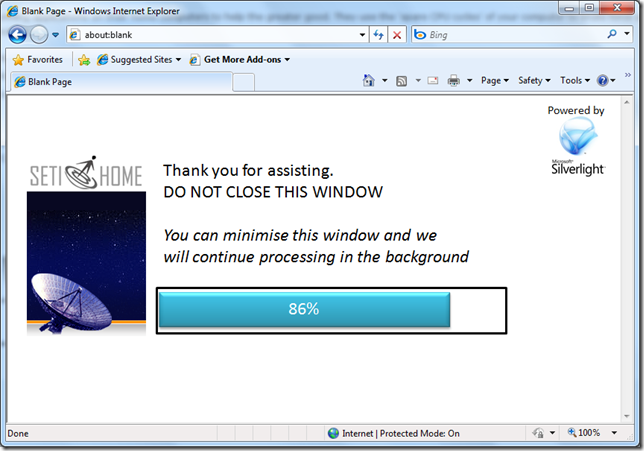

Here is a quick example I mocked up for SETI@home.

- For a user to help, they would just need to point their browser at http://seti-at-home.com/silverlight

- The Silverlight client loads, and MEF immediately fetches the processing module

- The Silverlight client then requests a chunk of work from the SETI@home servers

- The Silverlight client processes the work, saving to isolated storage as it goes and displaying a progress bar

- Once the data has been processed, the Silverlight client sends the results back to the server and requests the next block of data

- The request, process and send steps repeat for as long as the page stays open
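The request, process and send loop in these steps might look roughly like this on the client. It is sketched in Python with the server calls passed in as plain functions; `fetch_chunk`, `process` and `submit` are hypothetical stand-ins for the web service calls and the MEF-loaded module.

```python
from typing import Callable, Optional, Sequence, Tuple

# A unit of work as handed out by the server: (chunk id, data to process).
Chunk = Tuple[int, Sequence[float]]

def run_client(fetch_chunk: Callable[[], Optional[Chunk]],
               process: Callable[[Sequence[float]], float],
               submit: Callable[[int, float], None],
               max_chunks: Optional[int] = None) -> int:
    """Request, process and submit chunks until the server has no work left
    (or `max_chunks` have been done). Returns the number of chunks completed."""
    completed = 0
    while max_chunks is None or completed < max_chunks:
        job = fetch_chunk()          # ask the server for the next chunk
        if job is None:              # nothing left to do
            break
        chunk_id, data = job
        result = process(data)       # run the downloaded algorithm
        submit(chunk_id, result)     # send the result back
        completed += 1
    return completed

# Usage with an in-memory stand-in for the server:
queue = [(0, [1.0, 2.0]), (1, [3.0, 4.0])]
results = {}
run_client(lambda: queue.pop(0) if queue else None, sum, results.__setitem__)
# results is now {0: 3.0, 1: 7.0}
```

In Silverlight itself the service calls are asynchronous and the work would run off the UI thread so the progress bar stays responsive; the synchronous loop here only shows the order of operations.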

Final thoughts

This could be an easy way for users to help out important causes, while also simplifying the deployment and update process of the software. Here are some final pros and cons of this proposal:

Pros

- Easy deployment, and easy for users to help out

- You get access to the full processing power of the user’s PC

- Easy ways to use .NET parallel frameworks to further increase performance

Cons

- It might be difficult to make the application use only idle CPU time; it could take over the user’s PC and slow the whole system down

- This could be used for malicious purposes (more on that in a later post)
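The concern about idle CPU time can be softened, though not removed, by capping the client’s duty cycle instead of trying to detect idle time, since code running in the browser generally cannot see how busy the rest of the machine is. Below is a minimal Python sketch of that approach; the function name and its `max_cpu_share` and `slice_seconds` parameters are hypothetical.

```python
import time
from typing import Callable, Iterable

def run_throttled(steps: Iterable[Callable[[], None]],
                  max_cpu_share: float = 0.5,
                  slice_seconds: float = 0.05) -> int:
    """Run each step in turn, pausing after every ~`slice_seconds` of work so
    that roughly `max_cpu_share` of wall-clock time is spent computing.
    Returns the number of steps executed."""
    if not 0.0 < max_cpu_share <= 1.0:
        raise ValueError("max_cpu_share must be in (0, 1]")
    # Seconds of sleep owed for every second of work.
    idle_per_busy = (1.0 - max_cpu_share) / max_cpu_share
    count = 0
    slice_start = time.perf_counter()
    for step in steps:
        step()
        count += 1
        busy = time.perf_counter() - slice_start
        if busy >= slice_seconds:
            time.sleep(busy * idle_per_busy)   # give the CPU back
            slice_start = time.perf_counter()
    return count
```

This limits the load from a single worker thread on one core; it does not react to what else the user is doing, so a conservative default share and a visible pause button are probably still worth having.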